“I’m not so interested in LLMs anymore,” — Yann LeCun, Meta’s Chief AI Scientist

My interest in AI goes back to my childhood, to the very roots of my interest in technology. Robots, gadgets, and video games propelled me into a career as a programmer spanning decades. I've spent several years teaching introductory AI/ML at the college level, developing a curriculum to keep pace with the field. Despite my interest, I've been relatively silent about generative AI, and not because I'm on hiatus. I haven't posted about it online, nor discussed it much with colleagues or friends. What little discussion I’ve had about it has been in search of catharsis, with therapists and family. It's been hard for me to respond to the frothing hysterics that have permeated nearly every form of media I consume. Bold declarations about how AI may alter our world have grown into an incoherent cacophony of panic, fear, greed, and confused desperation. Like many, I’ve ridden the rollercoaster that is the question "will I be replaced by AI?" I finally feel that I can compose a calm response. Should anyone find fault with this post, I am ready to hear it, and I will learn what I can from your reaction.

Frustration & the AI boom

My experience has been one of frustration, if not exasperation. Just a few years ago, I was teaching my undergrad students about past "AI winters", periods when rapid adoption of and investment in AI were followed by abandonment and derision from industry and the public. The boom-and-bust modern history of AI is very much a cautionary tale about over-optimism during the economic development of new industries. For years before LLMs took off, I had intended to go deeper into AI, potentially even committing my remaining working years to the academic field. Yet I was struck dumb by the tsunami brought about by the public success of LLMs. I suddenly felt alienated from a field I had considered devoting myself to. When I first uttered the words “generative AI”, years ago, before I had heard of the actual field, I thought I was just imagining a new way to make video game content. Why have I come to feel like I’m saying a dirty word?

Technical concerns

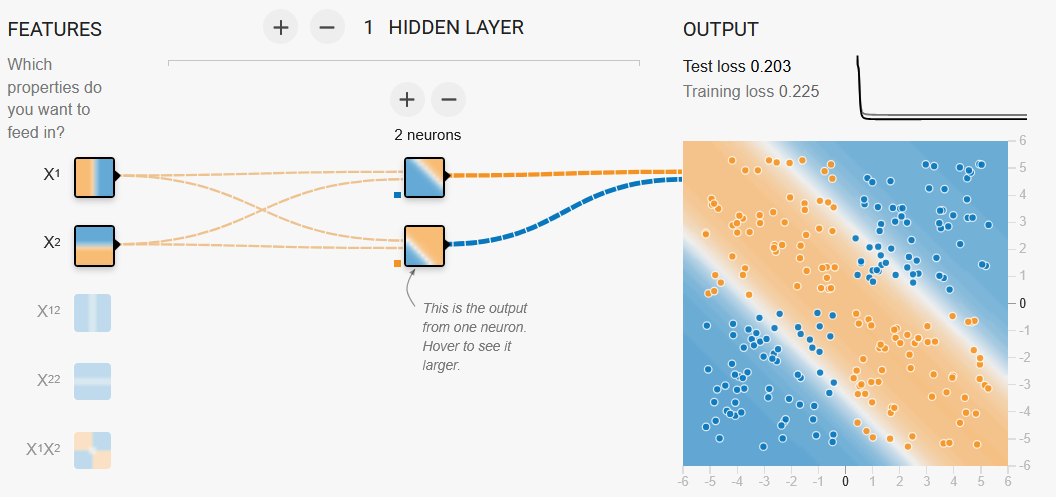

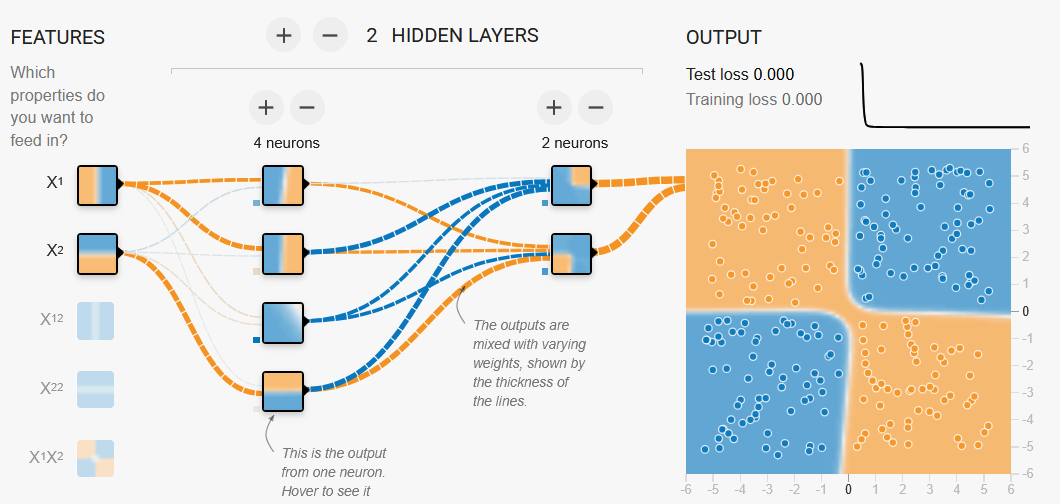

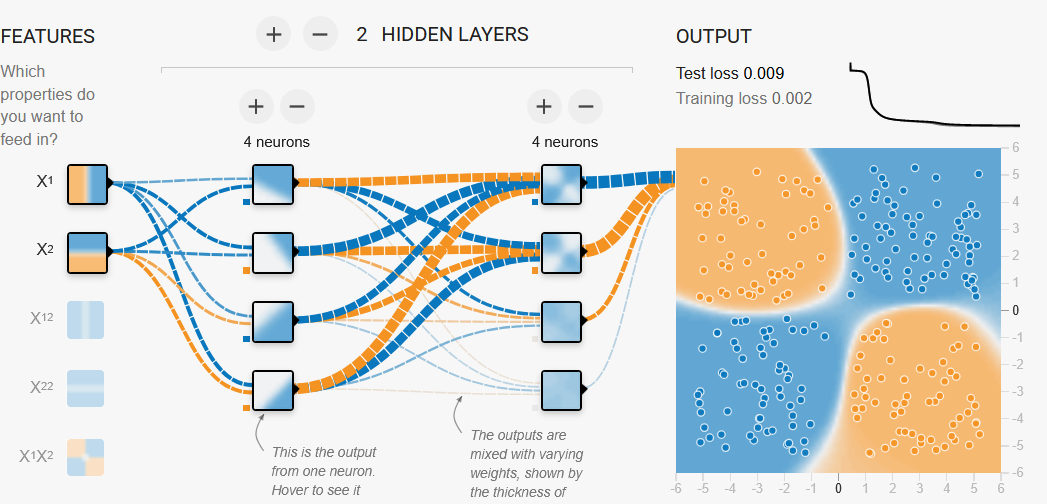

I need to get a bit technical. The heart of my discomfort is the fundamental axiom I taught my students. Neural networks, the technology underpinning ChatGPT, Gemini, and other LLM models, are approximations of mathematical functions. In math, or in code, a function takes an input and gives an output. The function does some useful work such as calculation. Ideally, for purposes of building tools, they are idempotent meaning the output is consistent with the input. These models are optimized to solve very specific problems, and it is not retroactively clear how they do it, since the functions approximated by the network are not visible and it is impractical to explore the state space of a approximated continuous function. Furthermore, unlike logically defined functions, they are not idempotent, meaning the output is unreliable. The brittle nature of neural networks becomes very intuitive when you realize that to model a certain function you can add layers of neurons, each a set of simple decision makers, until the model fits the function given training. Yet even a well-fitting network has blind spots where the function fails. Too few neurons and layers, and you will under-fit. Too many layers and neurons and you will over-fit. Over-fitting and under-fitting will both result in false positives and negatives. A well-fitting model is only accurate as long as the rules and data types are static. The physical world is far more dynamic. This kind of hands-on experience with neural networks helps one deeply understand their strengths and limitations.

Underfitting with 1 layer and 2 neurons

Fitting well, with 2 layer and 6 neurons

Overfitting with 2 layers and 8 neurons
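The pictured networks show under- and over-fitting on two-dimensional data. A minimal sketch of the same trade-off in one dimension follows. It deliberately swaps in polynomial curve fitting instead of a neural network, with polynomial degree standing in for the number of layers and neurons. The sine-shaped target, the noise level, the sample sizes, and the degrees 1, 4, and 15 are arbitrary assumptions chosen for illustration, not the setup behind the figures.

```python
import numpy as np


def make_data(rng, n, noise=0.2):
    """Noisy samples of an assumed toy target, sin(2*pi*x) on [0, 1]."""
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, noise, n)
    return x, y


def mse(model, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((model(x) - y) ** 2))


rng = np.random.default_rng(0)
x_train, y_train = make_data(rng, 20)  # small training set
x_test, y_test = make_data(rng, 200)   # held-out data from the same distribution

# Polynomial degree is a stand-in for network capacity (layers x neurons).
results = {}
for degree in (1, 4, 15):
    model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))

for degree, (train_err, test_err) in results.items():
    print(f"degree {degree:2d}: train MSE {train_err:.4f}, test MSE {test_err:.4f}")
```

Typically the degree-1 fit scores poorly on both sets (under-fitting), the degree-4 fit does well on both, and the degree-15 fit has the lowest training error yet does noticeably worse on held-out data than on the data it was trained on (over-fitting).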

Here we're struggling to fit simple two-dimensional data. Imagine the fitting problems that arise with very high-dimensional data requiring the articulation of millions of features. Plainly, a model is only as good as its training data. This fundamental facet of AI modeling has always been a hard truth, and it remains so. Hallucinations and failures to solve simple logic problems demonstrate the inherent flaws, propagated from imperfect data. While a model may make surprising connections between distant concepts represented in its training corpus, there is no guarantee that the corpus accurately represents the underlying concepts or the relationships between them. In short, errors are fundamental to AI models, and they are particularly pervasive and concerning in language models, whose training data spans everything from manga to falsified scientific papers and Nazi propaganda. As users anthropomorphize the natural language interface, they grow to trust what they are told. It's not far-fetched to think that millions of people will soon form closer relationships with LLMs than with other humans. If we could explain why LLMs say the things they do, perhaps it would protect us from their mistakes, or from malicious content seeded in their training corpus, but nobody has figured out how. It’s not even clear that an explanation would help, as it might well shatter our confidence to see clearly how unhinged from reality their functioning is.
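To make "a model is only as good as its training data" concrete, here is a minimal sketch in which a model fit on a narrow slice of inputs looks nearly perfect there and is wildly wrong just outside it. It uses polynomial fitting as a simple stand-in for a neural network (an assumption for illustration, not how LLMs are built), and the target function, the input ranges, and the degree are arbitrary choices.

```python
import numpy as np


def true_rule(x):
    """Hypothetical 'world': a smooth rule the model only sees through samples."""
    return np.sin(2 * np.pi * x)


# Training data covers only x in [0, 1], an assumed, limited sample of the world.
x_train = np.linspace(0.0, 1.0, 50)
model = np.polynomial.Polynomial.fit(x_train, true_rule(x_train), deg=5)


def mse(x):
    """Mean squared error of the fitted model against the true rule."""
    return float(np.mean((model(x) - true_rule(x)) ** 2))


inside = mse(np.linspace(0.0, 1.0, 200))   # same range as the training data
outside = mse(np.linspace(1.0, 2.0, 200))  # inputs the training data never covered

print(f"error inside training range:  {inside:.6f}")
print(f"error outside training range: {outside:.2f}")
```

The fit is excellent on the range it was trained on and breaks down badly beyond it, even though the underlying rule never changed; the model simply has no information about inputs its data did not cover.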

On AGI predictions & societal risks

When pundits like Sam Altman predict imminent AGI (artificial general intelligence), they speak of these surprising abstractions, formed in the layers of the network, that can reason about problems not directly represented in the source data. This so-called reasoning can only ever be as intelligent as the input data, and the data can only ever be an arbitrary sampling of the world. LLMs represent a quantum leap in natural language search and retrieval, making virtually all data available on the internet accessible, with the ability to generalize and remix that data. In areas of accuracy, reliability, reasoning, and problem solving, however, LLMs may have overreached, opening a Pandora’s box: environmental havoc, intellectual property theft, psychological and intellectual dependency, the propagation of AI slop, misinformation, and propaganda, and economic and existential panic. That’s just off the top of my head. The opportunistic amplification of signals with negative societal effects, and the lack of consideration for unintended consequences, mirror the troubled evolution of cryptocurrency, another technology I’ve long distanced myself from over similar ethical quandaries. By nature, LLMs are unreliable, error-prone, and unable to reason, so they are not capable of generally improving upon people. Certainly, LLMs and the agentic systems built on them create economic value, but after the societal cost, what is the net value? I suspect nobody will ever answer this question.

Perhaps the most wrenching aspect of the media circus, for me, is the discussion about replacing most of the workforce without any public discourse about how to save the economy from collapse once those people are unemployed. The government is silent on that topic, as is industry. Some optimistically opine that jobs will be created, but nobody can say what those jobs will be. AI pundits, feigning vulnerability, tell us how the dangers of AI keep them awake at night, and then increase their marketing budgets and plan mass layoffs. What a cynical idea: to replace humans with lesser performers, only to bring about the ruin of society, or at least of the working class. The idea that generative AI will exceed the creative potential of humans is passed off as a given, yet there is no evidence for it. AI-generated media is riddled with inconsistencies and devoid of inspiration. It galls me that the derivative nature of generative AI, and the limits such regurgitation places on us when we depend on it, are not recognized. Even if the plan to replace human workers succeeds, are we expected to hope that the few who control this immense wealth and power will share it out of goodwill? This performative posturing by corporate and government leaders reeks of class warfare, nihilistic politicking, and the malignant absence of compassion and imagination. My plea, as it were, to those working on the adoption of these technologies is to rise above this gross insensitivity and to assess very critically where these tools could and should be used, so as to minimize harm.

LeCun, winters, and what’s next

Let’s revisit Yann LeCun’s very quotable soundbite at the top. LLMs started this latest AI gold rush, and now that gold rush threatens to turn into another AI winter. The sunk cost fallacy ensures that leaders betting on generative AI replacing humans will keep placing bets. Corporations benefiting from the AI craze are pushing the status quo and the fantasy of imminent AGI. The alternative is acknowledging a new AI winter, with the threat of harsh pushback from investors, consumers, governments, and even voters. With LLMs having smashed against the brick wall of reality, scientists like LeCun are leveraging the investment environment to pour money into entirely new fields of AI research. They’re not being coy; they’re saying straight to our faces that replacing humans and creating AGI is still just a fantasy, for all the reasons I laid out. There is a limited window, before the world catches on to the stark limitations of LLMs, to use current investment momentum for new ideas. Like squirrels storing acorns, researchers are fattening up while they can. It’s clear that AI research has the potential to benefit us all. The question is whether we’ll create AI that affirms the best of humanity, without causing irreparable harm, before the next winter. As painful as that winter may be, when it comes, it will be reassuring to know that basic science remains unassailable while hubris and greed come and go with each passing season. I will be able to think about AI once again without dread.

Closing thoughts

Finally, I do believe AI will play an important role in the evolution of our world. It may, over time, fundamentally alter our minds and bodies, challenging the essential notion of what it means to be human. When I was much younger, I called myself a transhumanist and talked excitedly about the singularity. A Luddite, I am not. Age makes me more cautious. While it feels inevitable that we will collectively go on this journey, the haste and mood of our current trajectory are driven largely by greed and contempt, aiming to benefit a few. It falls to all who make decisions, now and in future generations, to include kindness and compassion in our itinerary, and it falls to all of us to support those who would act responsibly and with integrity toward fellow humans.

I recommend this article based on interviews with Yann LeCun, one of the “godfathers of AI”, to further understand the state of the art of AI and where it is headed:

Newsweek: AI impact — interview with Yann LeCun

For the video in which LeCun shares the quoted soundbite, see:

YouTube — Yann LeCun interview / clip